Robust Point Tracking with Epipolar Constraints

Geometry-driven point tracking that reduces long-horizon drift by enforcing epipolar consistency via post-processing refinement and weakly-supervised finetuning.

Overview

We present a geometry-driven, robust point-tracking framework that improves tracking accuracy in videos by enforcing epipolar constraints. While modern trackers (e.g., CoTracker) are strong, they can accumulate geometric drift over long sequences, producing correspondences that violate multi-view geometry.

We address this in two complementary ways:

- Post-processing (model-agnostic): An iterative refinement module that corrects correspondences frame-by-frame to satisfy epipolar constraints.

- Finetuning: A CoTracker finetuning strategy that adds a soft epipolar loss on rigid/static regions.

The full report (PDF) and the code (GitHub) are listed under Links at the end of this page.

Contributions

- Iterative epipolar refinement that post-processes tracks from any point tracker, combining RANSAC-based fundamental matrix estimation with correspondence correction.

- Teacher–student weak supervision with a soft epipolar constraint that encourages geometric consistency while learning to separate static/dynamic motion.

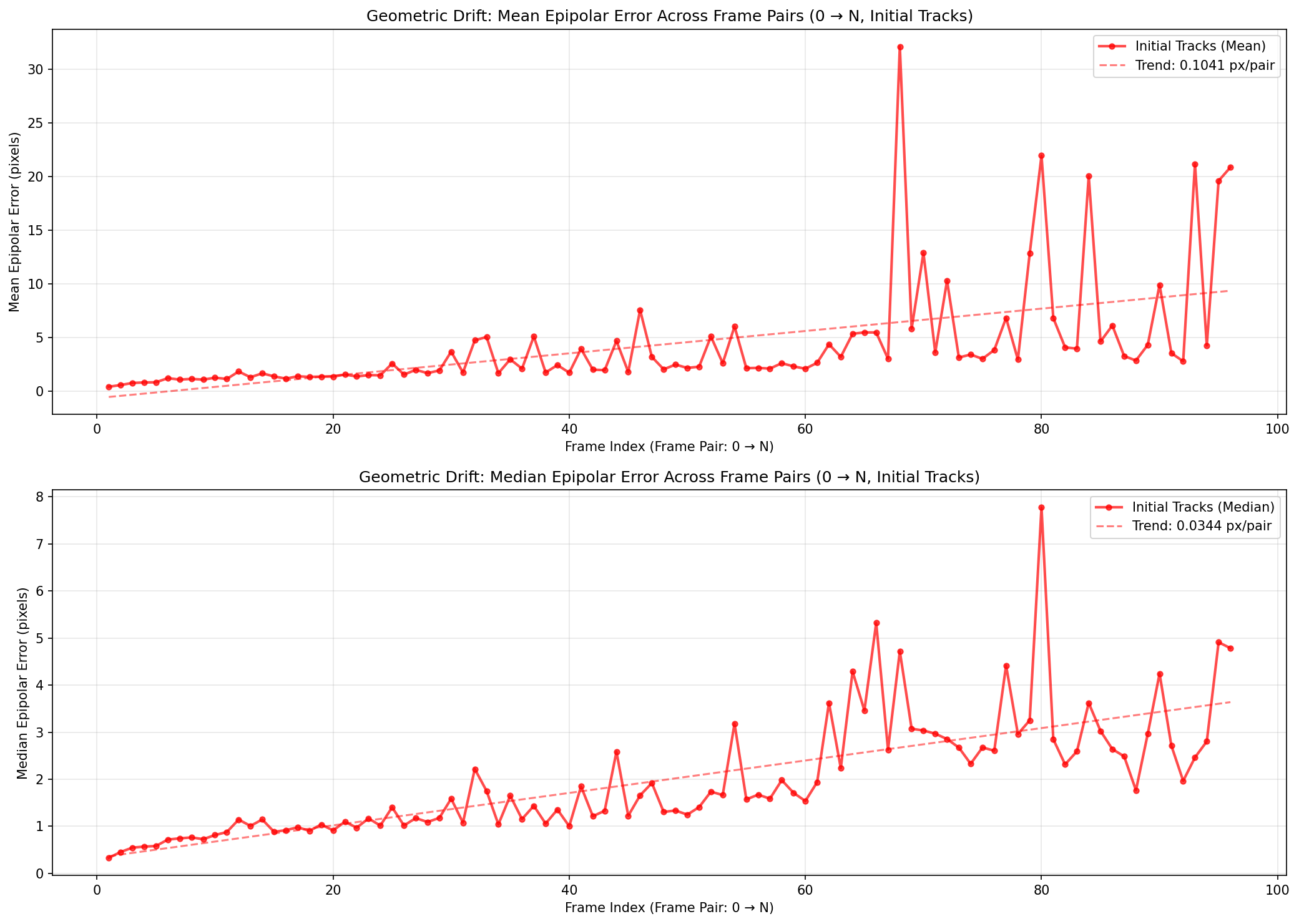

Motivation: geometric drift grows over time

For rigid/static scene points, correspondences between two frames must satisfy the epipolar constraint

\[ x_t^\top F x_0 = 0, \]

where \(F\) is the fundamental matrix and \(x_0\), \(x_t\) are the homogeneous image coordinates of the same point in frame 0 and frame \(t\). In practice, long-horizon trackers can drift and increasingly violate this constraint.
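To make the constraint concrete, here is a small worked case. It is an illustrative assumption, not a configuration from the report: a camera that translates purely horizontally with unchanged intrinsics, so that the fundamental matrix is proportional to the cross-product matrix of the first basis vector. The symbols \(u\), \(v\) (pixel coordinates) and \(e_1\) are introduced here only for this example.

```latex
F \propto [e_1]_\times =
\begin{pmatrix} 0 & 0 & 0 \\ 0 & 0 & -1 \\ 0 & 1 & 0 \end{pmatrix},
\qquad
x_0 = \begin{pmatrix} u_0 \\ v_0 \\ 1 \end{pmatrix},
\qquad
x_t = \begin{pmatrix} u_t \\ v_t \\ 1 \end{pmatrix}

F x_0 = \begin{pmatrix} 0 \\ -1 \\ v_0 \end{pmatrix},
\qquad
x_t^\top F x_0 = v_0 - v_t
```

In this setup the constraint holds exactly when \(v_t = v_0\), i.e. the point stays on its image row. A track that has drifted vertically by \(\delta\) pixels leaves a residual of magnitude \(|\delta|\), while drift along the row (along the epipolar line) is invisible to the constraint.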

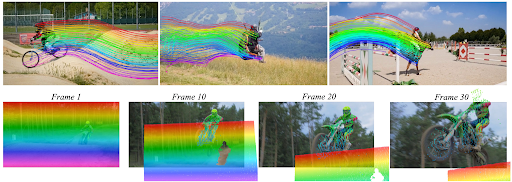

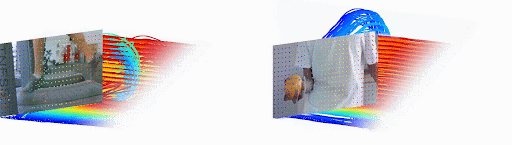

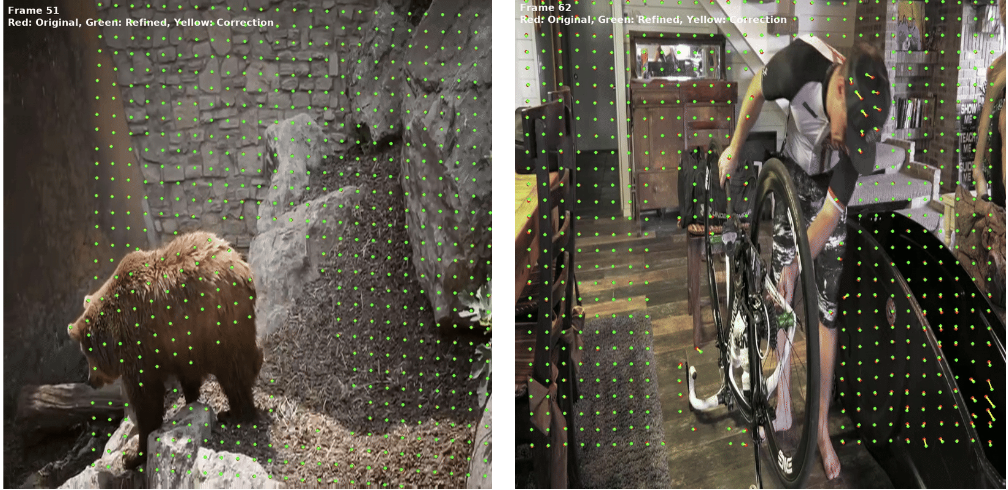

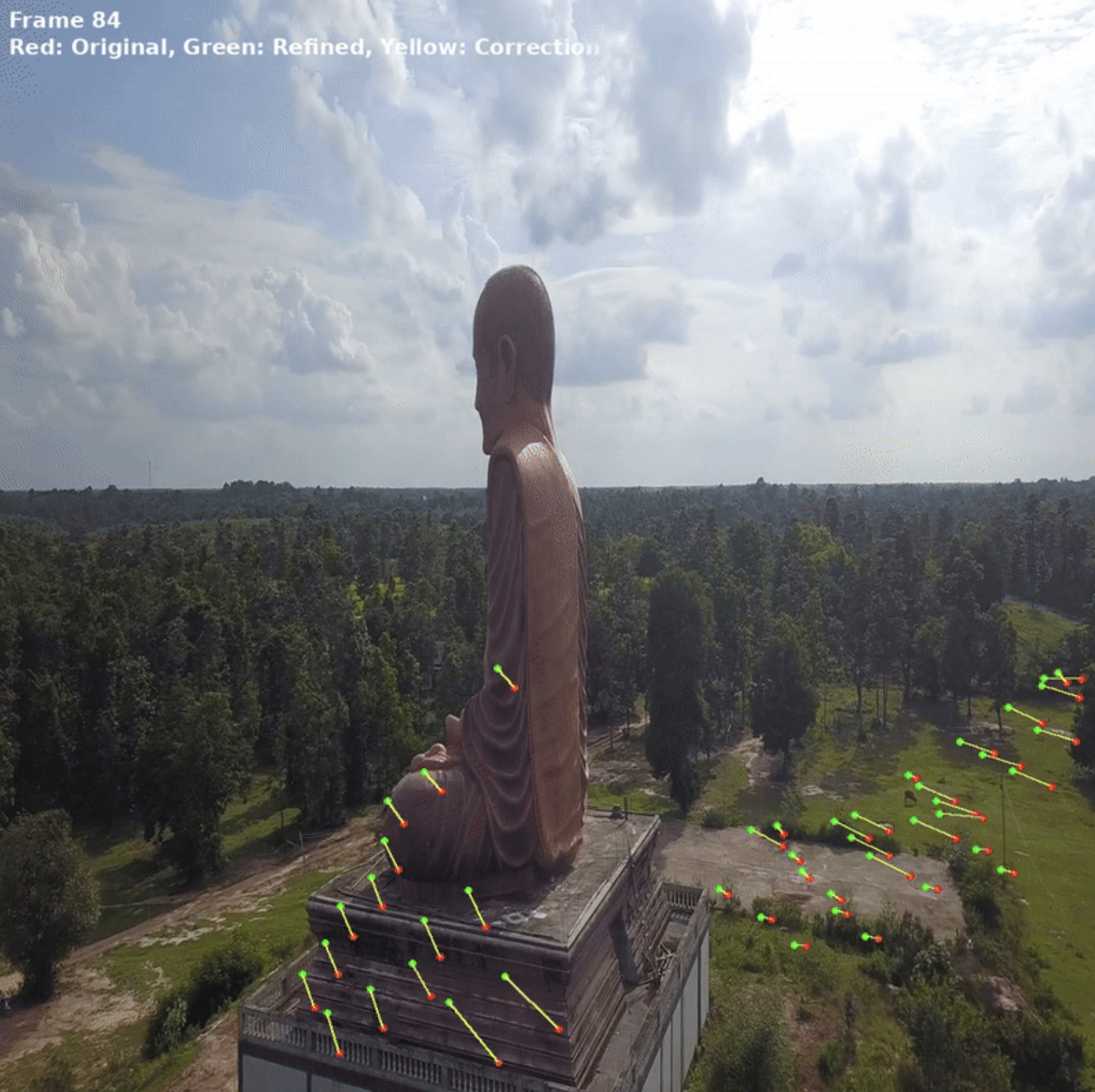

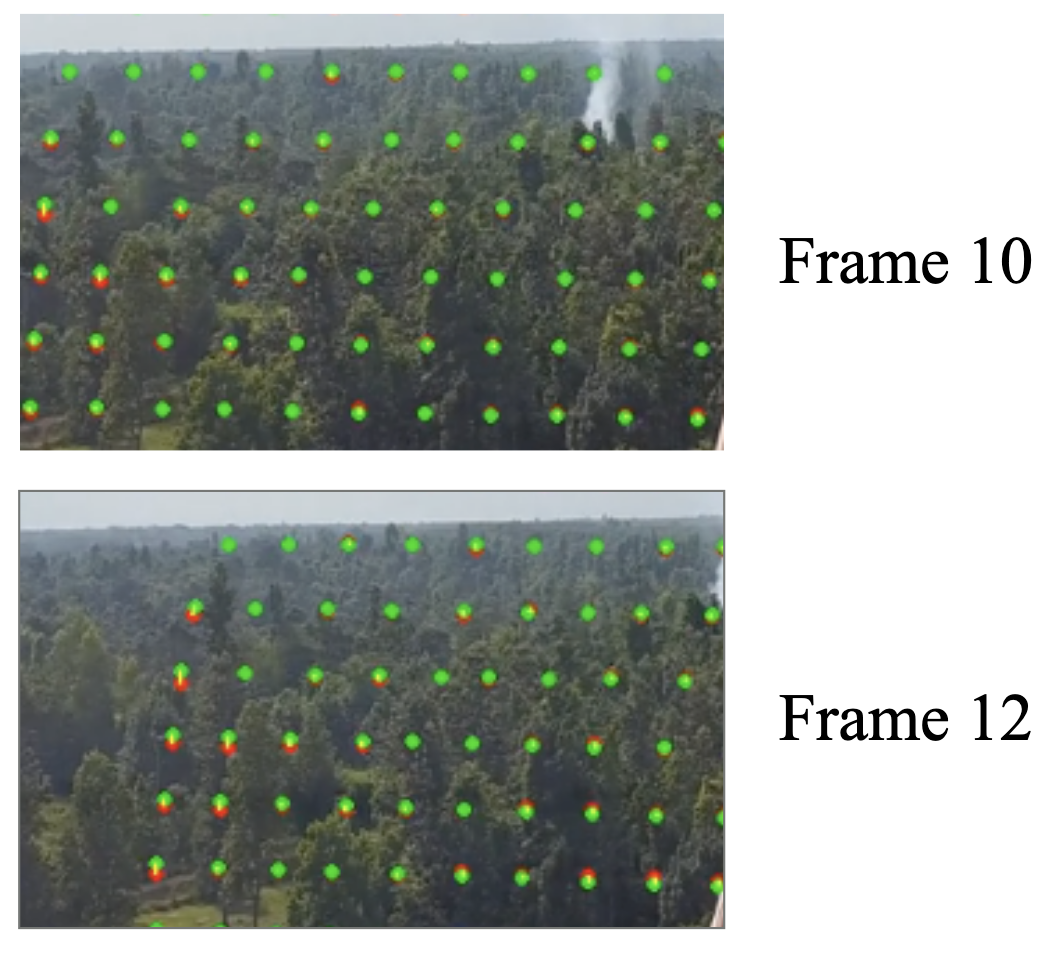

Figures

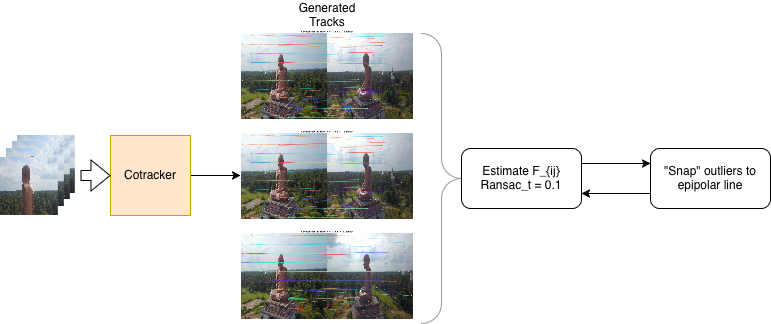

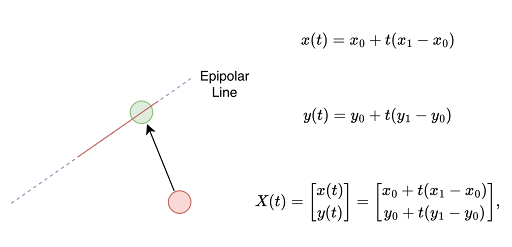

Method 1 — Post-processing: iterative epipolar refinement (model-agnostic)

Given tracks from any point tracker:

- Estimate a fundamental matrix \(F_{0t}\) between frame 0 and each later frame \(t\) using RANSAC on mutually-visible correspondences.

- Compute epipolar error (point-to-epipolar-line distance): \[ d_{\text{epi}}(x_0, x_t)=\frac{|x_t^\top F x_0|}{\sqrt{(Fx_0)_1^2 + (Fx_0)_2^2}}. \]

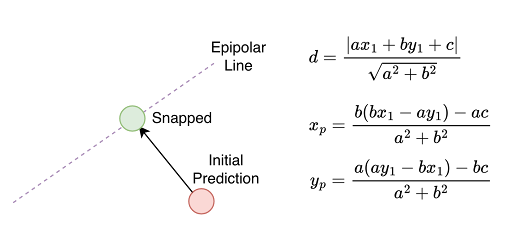

- Identify outliers and correct correspondences:

  - Primary: SIFT-based local search in a 20 px window around the epipolar line to recover a plausible match.

  - Fallback: project the outlier point onto the epipolar line.

- Re-estimate \(F\) with tighter thresholds and iterate until convergence.
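The steps above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the implementation from the report: `estimate_F` is a hypothetical callable standing in for the RANSAC estimator (OpenCV's `cv2.findFundamentalMat` with `cv2.FM_RANSAC` would be one option), the threshold schedule `(3.0, 2.0, 1.0)` is made up, a fixed schedule replaces the convergence test, and only the projection fallback is shown (the SIFT-based search is omitted).

```python
import math

def epipolar_line(F, x0):
    """Epipolar line l = F @ [u0, v0, 1] in frame t, as (a, b, c) with a*u + b*v + c = 0."""
    u, v = x0
    return tuple(F[r][0] * u + F[r][1] * v + F[r][2] for r in range(3))

def epipolar_distance(F, x0, xt):
    """Point-to-epipolar-line distance in pixels (assumes x0 is not at the epipole)."""
    a, b, c = epipolar_line(F, x0)
    return abs(a * xt[0] + b * xt[1] + c) / math.hypot(a, b)

def project_to_line(F, x0, xt):
    """Fallback correction: orthogonal projection of xt onto its epipolar line."""
    a, b, c = epipolar_line(F, x0)
    s = (a * xt[0] + b * xt[1] + c) / (a * a + b * b)
    return (xt[0] - a * s, xt[1] - b * s)

def refine(pts0, ptst, estimate_F, thresholds=(3.0, 2.0, 1.0)):
    """Re-estimate F, correct outliers, then repeat with a tighter threshold.

    estimate_F(pts0, ptst) is a hypothetical hook returning a 3x3 nested list.
    """
    ptst = list(ptst)  # do not modify the caller's tracks
    for thr in thresholds:
        F = estimate_F(pts0, ptst)
        for i, (x0, xt) in enumerate(zip(pts0, ptst)):
            if epipolar_distance(F, x0, xt) > thr:
                ptst[i] = project_to_line(F, x0, xt)
    return ptst
```

For example, with a fixed `F` for a horizontally translating camera, a point that drifted 5 px off its row is snapped back while a near-inlier is left alone:

```python
F = [[0, 0, 0], [0, 0, -1], [0, 1, 0]]
refine([(10, 20), (30, 40)], [(15, 25), (33, 40.5)], lambda a, b: F)
```

Projection only removes the component of the error perpendicular to the epipolar line, which is one reason an appearance-based search along the line is the preferred correction.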

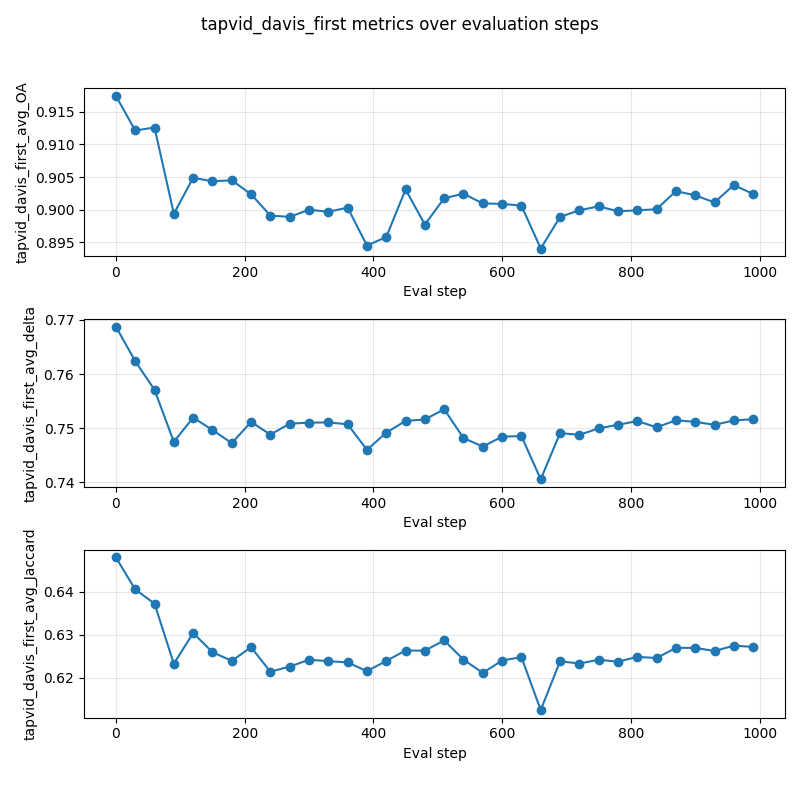

Method 2 — Finetuning: soft epipolar loss with teacher–student supervision

We also finetune CoTracker with a weakly-supervised epipolar objective on (approximately) static regions, inspired by ROMO-style motion masks.

High-level idea:

- A teacher produces tracks.

- A student is trained to stay close to the teacher on dynamic regions, while receiving an epipolar-consistency gradient on static regions.

- At inference time, no motion masks are used: the student is intended to have internalized the geometric prior during finetuning.
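One way to picture the objective is as a per-point sum of two terms selected by the static/dynamic mask. The sketch below is an assumption-laden illustration, not the loss from the report: the Huber penalty as the "soft" form, the weight `lam`, the scale `delta`, and the input layout (precomputed epipolar distances for the student's tracks, one boolean per point) are all hypothetical. Real training would express this in an autograd framework; plain Python is used here only to show the arithmetic.

```python
import math

def huber(d, delta=1.0):
    """Quadratic near zero, linear beyond delta, so gross outliers do not dominate."""
    return 0.5 * d * d if d <= delta else delta * (d - 0.5 * delta)

def weak_supervision_loss(student, teacher, epi_dist, static_mask,
                          lam=0.1, delta=1.0):
    """Teacher-matching term on dynamic points plus a soft epipolar term on static points.

    student, teacher: lists of (u, v) positions for the same query points.
    epi_dist: epipolar distance (px) of each student point.
    static_mask: True where the point is treated as static.
    """
    teacher_terms, epi_terms = [], []
    for s, t, d, is_static in zip(student, teacher, epi_dist, static_mask):
        if is_static:
            epi_terms.append(huber(d, delta))
        else:
            teacher_terms.append(math.dist(s, t))

    def mean(xs):
        return sum(xs) / len(xs) if xs else 0.0

    return mean(teacher_terms) + lam * mean(epi_terms)
```

With one static point 2 px off its epipolar line and one dynamic point 5 px from the teacher, the two terms are 1.5 and 5.0, giving a loss of 5.0 + 0.1 * 1.5 under these assumed weights.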

Evaluation setup & metrics

We evaluate on long sequences (80+ frames) with both rigid and non-rigid motion. In addition to standard TAP‑Vid DAVIS metrics, we report:

- Mean / Median epipolar error (px): lower means more multi-view consistent tracks.
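As a minimal sketch of how such a summary could be computed, the helper below aggregates per-point epipolar distances. Restricting the statistics to visible points is an assumption made here, and the function name and input layout are hypothetical.

```python
from statistics import mean, median

def epipolar_error_summary(errors, visible):
    """Mean and median epipolar error (px) over points flagged as visible."""
    kept = [e for e, v in zip(errors, visible) if v]
    return mean(kept), median(kept)
```

Reporting both is useful because the median is far less sensitive than the mean to a few grossly drifted tracks.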

Results

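Across our experiments, enforcing geometry improves epipolar consistency at a small cost to standard tracking metrics. Relative to the CoTracker3 baseline, mean epipolar error drops from 3.24 px to 2.41 px with finetuning and to 1.31 px for Ours + Post-processing, while the standard metrics fall by about 1.3 to 2.2 points.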

| Method | δ_visavg (%) | OA (%) | AJ (%) | Mean epipolar error (px) | Median epipolar error (px) |
|---|---|---|---|---|---|
| PIPs | 64.0 | 77.0 | 63.5 | 4.87 | 3.42 |
| TAPIR | 62.9 | 88.0 | 73.3 | 3.95 | 2.76 |
| CoTracker3 (baseline) | 77.3 | 91.8 | 64.5 | 3.24 | 2.18 |
| CoTracker3 + Finetune (ours) | 75.1 | 90.5 | 62.7 | 2.41 | 1.58 |
| Ours + Post-processing | 75.8 | 90.3 | 63.1 | 1.31 | 0.89 |

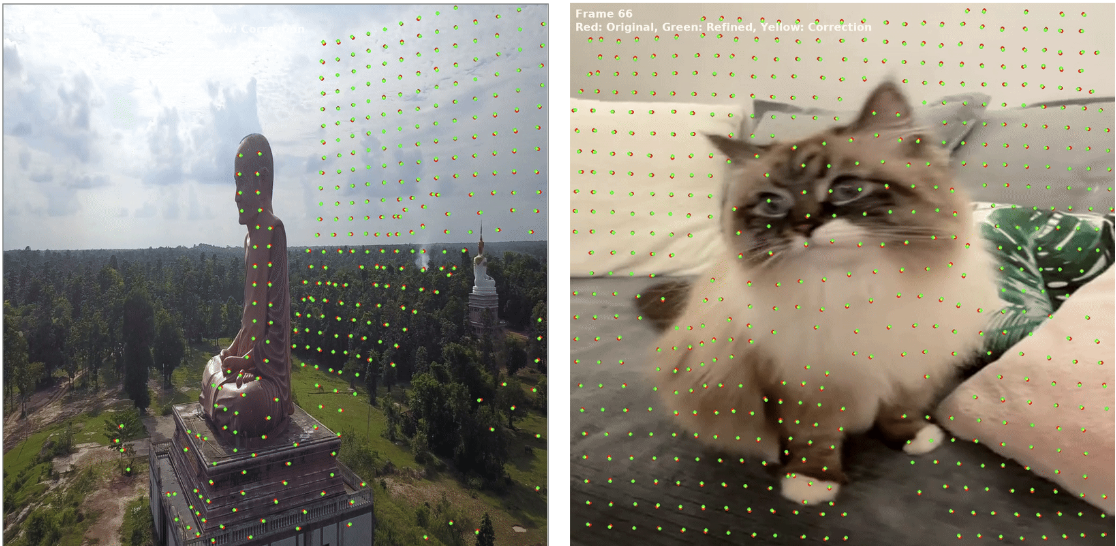

Qualitative results & failure cases

We generally observe geometrically consistent corrections in static regions, but those corrections can sometimes leak into dynamic regions and distort motion.

Links

- Code: https://github.com/adithyaknarayan/EpiTrack

- Report (PDF): /assets/pdf/Geom_Final_report-2.pdf